Basic Probability

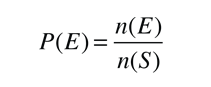

The probability of a given event can be thought of as the ratio of the number of ways that event can happen to the number of ways that any possible outcome could happen, provided all outcomes are equally likely. The concept of probability is one of the foundations of general statistics. The likelihood of a particular outcome among the set of possible outcomes is expressed by a number from 0 to 1, with 0 representing an impossible outcome and 1 representing the absolute certainty of that outcome. If we identify the set of all possible outcomes as the "sample space" and denote it by S, and label the desired event as E, then the probability for event E can be written

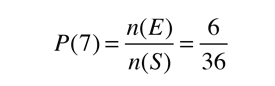

When throwing a pair of dice, the number 7 is the best bet since it is the most probable total. There are six ways to throw a 7, out of 36 possible outcomes for a throw. The probability is then

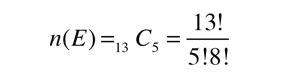

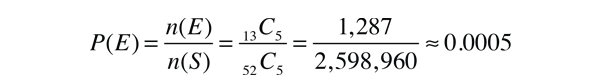
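
The dice count above can be checked by listing every outcome. The following is a minimal sketch, assuming two fair six-sided dice; the names `outcomes` and `probability_of_total` are illustrative and not part of the original text.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes for a throw of two six-sided dice.
outcomes = list(product(range(1, 7), repeat=2))

def probability_of_total(total):
    """Return P(sum of the two dice == total) by direct counting."""
    favorable = sum(1 for a, b in outcomes if a + b == total)
    return Fraction(favorable, len(outcomes))

p_seven = probability_of_total(7)                      # 6/36 = 1/6
most_probable = max(range(2, 13), key=probability_of_total)

print(p_seven, most_probable)
```

Using `Fraction` keeps the result exact, so the ratio of favorable outcomes to total outcomes is visible without rounding.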

The idea of an "event" is a very general one. Suppose you draw five cards from a standard deck of 52 playing cards, and you want to calculate the probability that all five cards are hearts. This desired event brings in the idea of a combination. The number of ways you can pick five hearts, without regard to which hearts or which order, is given by the combination

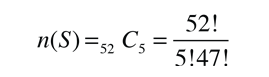

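The same basic probability expression is used, but it takes the form

$$P(\text{5 hearts}) = \frac{\binom{13}{5}}{\binom{52}{5}} = \frac{1287}{2598960} \approx 0.000495$$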

So drawing a five-card hand of a single selected suit is a rare event with a probability of about one in 2000.
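
The five-hearts probability can be reproduced with the standard library. This is a sketch under the assumption of a standard 52-card deck with 13 hearts; the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

# Number of ways to choose 5 hearts from the 13 hearts in the deck.
ways_five_hearts = comb(13, 5)    # 1287
# Number of ways to choose any 5 cards from the 52-card deck.
ways_any_hand = comb(52, 5)       # 2598960

p_five_hearts = Fraction(ways_five_hearts, ways_any_hand)

print(p_five_hearts, float(p_five_hearts))
```

The reciprocal of the result is a little over 2000, consistent with the "about one in 2000" estimate.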

If you want the probability that any one of a number of disjoint events will occur, the probabilities of the single events can be added. For example, the probability of drawing five cards of any one suit is the sum of four equal probabilities, so it is four times as likely as drawing five cards of one selected suit. In Boolean language, if the events are related by a logical OR, then the probabilities add. The probability of 5 hearts OR 5 clubs OR 5 diamonds OR 5 spades is approximately 0.002, or 1 out of 500, four times the probability of drawing 5 hearts.

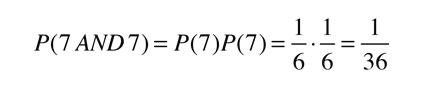
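
The OR rule for disjoint events can be sketched by summing the single-suit probability over the four suits. The code assumes a standard 52-card deck; the list `suits` and the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

# Probability of drawing five cards of one selected suit.
p_one_suit = Fraction(comb(13, 5), comb(52, 5))

# The four events are disjoint (a five-card hand cannot be all hearts
# and all clubs at once), so their probabilities simply add.
suits = ["hearts", "clubs", "diamonds", "spades"]
p_any_single_suit = sum(p_one_suit for _ in suits)

print(p_any_single_suit, float(p_any_single_suit))
```

The sum comes out close to 0.002, or roughly 1 in 500.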

If independent events are related by a logical AND, the resultant probability is the product of the individual probabilities. If you want the probability of throwing a 7 with a pair of dice AND throwing another 7 on the second throw, then the probability would be the product 1/6 x 1/6 = 1/36.
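
As a rough check of the AND rule for two throws of a pair of dice, the product of the individual probabilities can be compared with a direct count over every pair of throws. The sketch assumes fair dice and independent throws; all names are illustrative.

```python
from fractions import Fraction
from itertools import product

# All 36 outcomes for one throw of two dice.
rolls = list(product(range(1, 7), repeat=2))
p_seven = Fraction(sum(1 for a, b in rolls if a + b == 7), len(rolls))

# AND rule for independent throws: multiply the probabilities.
p_two_sevens = p_seven * p_seven

# Cross-check by counting over all 36 x 36 pairs of throws.
pairs = list(product(rolls, repeat=2))
both_seven = sum(1 for first, second in pairs
                 if sum(first) == 7 and sum(second) == 7)
p_counted = Fraction(both_seven, len(pairs))

print(p_two_sevens, p_counted)
```

Both routes give 1/36, which is what the product 1/6 x 1/6 predicts.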

A common misconception about probability is that being "lucky" once will lower the probability that you will be "lucky" again. Probability has no causative effect, so for independent trials such as throws of a die, prior events have no influence on the probability for future events. For example:

- Probability of throwing a "2" with a single die: 1/6

- Probability of throwing "2" twice in a row, "2" AND "2": 1/6 x 1/6 = 1/36

- Probability of throwing a "2" on the next throw: 1/6

The "lucky" circumstance of throwing two "2"s in a row does not make it less likely that you will throw a "2" on the next throw. It is still 1/6.
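
The independence claim can be illustrated by counting over all sequences of three throws and keeping only those that start with two "2"s. This is a sketch assuming a fair six-sided die; the names are illustrative.

```python
from fractions import Fraction
from itertools import product

# All 216 equally likely sequences of three throws of one die.
throws = list(product(range(1, 7), repeat=3))

# Keep only the sequences where the first two throws were both "2".
first_two_are_2 = [t for t in throws if t[0] == 2 and t[1] == 2]

# Among those, count the sequences where the third throw is also "2".
third_also_2 = [t for t in first_two_are_2 if t[2] == 2]

p_next_is_2 = Fraction(len(third_also_2), len(first_two_are_2))

print(p_next_is_2)
```

Of the six sequences that begin with two "2"s, exactly one continues with a third "2", so the probability for the next throw is still 1/6.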

The expression for probability must be such that the probabilities for all possible outcomes add up to 1. Constraining the sum of all the probabilities to be 1 is called "normalization". When you calculate the probability by direct counting processes like those discussed above, the probabilities are always normalized. But when you develop expressions for the probability of events in nature, you must make sure that your probability expression is normalized.
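
Normalization can be sketched in two steps: first confirm that the counted probabilities for the totals of two dice already sum to 1, then rescale a set of unnormalized weights so that they do. The `normalize` helper and the example `weights` are hypothetical and chosen only for illustration.

```python
from fractions import Fraction
from itertools import product

# Probabilities obtained by direct counting are normalized automatically.
counts = {}
for a, b in product(range(1, 7), repeat=2):
    counts[a + b] = counts.get(a + b, 0) + 1
distribution = {total: Fraction(n, 36) for total, n in counts.items()}
total_probability = sum(distribution.values())

def normalize(weights):
    """Divide each weight by the sum so the results add up to 1."""
    norm = sum(weights.values())
    return {key: Fraction(w) / norm for key, w in weights.items()}

# Hypothetical relative weights that do not yet sum to 1.
weights = {"a": 3, "b": 1, "c": 4}
normalized = normalize(weights)

print(total_probability, normalized)
```

Dividing by the sum of the weights is the discrete version of normalization; for a continuous probability expression, the same role is played by dividing by the integral over all outcomes.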


| HyperPhysics*****HyperMath | R Nave |